AppSync VTL/JS Pipeline Resolvers

1 Summary

PipelineResolverStart() ==> funcA(ctx) ==> funcB(ctx) ==> funcC(ctx) ==> PipelineResolverEnd(ctx)

- The pipeline resolver handlers (PipelineResolverStart, PipelineResolverEnd) wrap a chain of composable request/response functions (funcA, funcB, funcC).

- funcA(ctx) can access PipelineResolverStart() output with ctx.prev.result

- funcB(ctx) can access funcA output with ctx.prev.result

- PipelineResolverEnd(ctx) can access funcC output with ctx.prev.result

WARN: Despite this, note that ALL functions in the chain have access to the GraphQL input via ctx.arguments
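
The flow above can be imitated locally in plain JavaScript. This is only a rough sketch: `runPipeline` and `step` are hypothetical names, nothing here is an AppSync API, and each function is collapsed into a single call (a real function has its own request/response pair with a data source call in between).

```javascript
// Local emulation only: AppSync runs the real chain for you.
function runPipeline(args, resolver, functions) {
  const ctx = { arguments: args, stash: {}, prev: {} };
  // The resolver's request output becomes ctx.prev.result for the first function.
  ctx.prev = { result: resolver.request(ctx) };
  for (const fn of functions) {
    // Each function sees the previous step's output in ctx.prev.result.
    ctx.prev = { result: fn(ctx) };
  }
  // The resolver's response handler sees the last function's output.
  return resolver.response(ctx);
}

const resolver = {
  request: (ctx) => ({ trace: ['start'] }),
  response: (ctx) => ctx.prev.result,
};

// Each step appends its name plus the GraphQL input, which stays visible
// to every function through ctx.arguments.
const step = (name) => (ctx) => ({
  trace: [...ctx.prev.result.trace, `${name}:${ctx.arguments.input.owner}`],
});

const result = runPipeline(
  { input: { owner: 'bob' } },
  resolver,
  [step('funcA'), step('funcB'), step('funcC')]
);
console.log(result.trace);
```

Every entry in the trace carries the owner from the original input, which shows that ctx.arguments does not change along the chain while ctx.prev.result does.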

2 ctx object

- ctx.stash is shared across the whole chain, like a Go context or React's useContext hook

- util.error is used to throw an error and stop the chain of functions

- util.appendError is used to record an error without stopping the chain of functions
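
A small local sketch of these three behaviours, assuming plain JavaScript outside AppSync. `makeUtil` and `runChain` are hypothetical stand-ins that only mimic the described semantics; in a real resolver the helpers come from '@aws-appsync/utils'.

```javascript
// Hypothetical stand-ins, not the real AppSync util object.
function makeUtil(ctx) {
  return {
    // Mimics util.error: throwing aborts the rest of the chain.
    error(message) {
      throw new Error(message);
    },
    // Mimics util.appendError: record the error and keep going.
    appendError(message) {
      ctx.outErrors.push({ message });
    },
  };
}

function runChain(functions) {
  const ctx = { stash: {}, outErrors: [], prev: { result: null } };
  const util = makeUtil(ctx);
  const ran = [];
  try {
    for (const fn of functions) {
      ctx.prev = { result: fn(ctx, util) };
      ran.push(fn.name);
    }
  } catch (err) {
    // A thrown error ends the loop; later functions never run.
    ctx.outErrors.push({ message: err.message });
  }
  return { ran, stash: ctx.stash, errors: ctx.outErrors.map((e) => e.message) };
}

function setOwner(ctx) {
  ctx.stash.owner = 'bob'; // visible to every later function
  return {};
}
function warn(ctx, util) {
  util.appendError('soft failure');
  return {};
}
function fail(ctx, util) {
  util.error(`hard failure for ${ctx.stash.owner}`);
}
function neverRuns() {
  return {};
}

const outcome = runChain([setOwner, warn, fail, neverRuns]);
console.log(outcome);
```

Only `setOwner` and `warn` complete: the appended error lets the chain continue, while the thrown one stops it before `neverRuns`.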

3 VTL vs JS

https://docs.aws.amazon.com/appsync/latest/devguide/resolver-reference-overview-js.html

## [Start] Mutation Update resolver. **

#set( $args = $util.defaultIfNull($ctx.stash.transformedArgs, $ctx.args) )

## Set the default values to put request **

#set( $mergedValues = $util.defaultIfNull($ctx.stash.defaultValues, {}) )

## copy the values from input **

$util.qr($mergedValues.putAll($util.defaultIfNull($args.input, {})))

## set the typename **

## Initialize the vars for creating ddb expression **

#set( $expNames = {} )

#set( $expValues = {} )

#set( $expSet = {} )

#set( $expAdd = {} )

#set( $expRemove = [] )

#if( $ctx.stash.metadata.modelObjectKey )

#set( $Key = $ctx.stash.metadata.modelObjectKey )

#else

#set( $Key = {

"id": $util.dynamodb.toDynamoDB($args.input.id)

} )

#end

## Model key **

#if( $ctx.stash.metadata.modelObjectKey )

#set( $keyFields = ["_version", "_deleted", "_lastChangedAt"] )

#foreach( $entry in $ctx.stash.metadata.modelObjectKey.entrySet() )

$util.qr($keyFields.add("$entry.key"))

#end

#else

#set( $keyFields = ["id", "_version", "_deleted", "_lastChangedAt"] )

#end

#foreach( $entry in $util.map.copyAndRemoveAllKeys($mergedValues, $keyFields).entrySet() )

#if( !$util.isNull($ctx.stash.metadata.dynamodbNameOverrideMap) && $ctx.stash.metadata.dynamodbNameOverrideMap.containsKey("$entry.key") )

#set( $entryKeyAttributeName = $ctx.stash.metadata.dynamodbNameOverrideMap.get("$entry.key") )

#else

#set( $entryKeyAttributeName = $entry.key )

#end

#if( $util.isNull($entry.value) )

#set( $discard = $expRemove.add("#$entryKeyAttributeName") )

$util.qr($expNames.put("#$entryKeyAttributeName", "$entry.key"))

#else

$util.qr($expSet.put("#$entryKeyAttributeName", ":$entryKeyAttributeName"))

$util.qr($expNames.put("#$entryKeyAttributeName", "$entry.key"))

$util.qr($expValues.put(":$entryKeyAttributeName", $util.dynamodb.toDynamoDB($entry.value)))

#end

#end

#set( $expression = "" )

#if( !$expSet.isEmpty() )

#set( $expression = "SET" )

#foreach( $entry in $expSet.entrySet() )

#set( $expression = "$expression $entry.key = $entry.value" )

#if( $foreach.hasNext() )

#set( $expression = "$expression," )

#end

#end

#end

#if( !$expAdd.isEmpty() )

#set( $expression = "$expression ADD" )

#foreach( $entry in $expAdd.entrySet() )

#set( $expression = "$expression $entry.key $entry.value" )

#if( $foreach.hasNext() )

#set( $expression = "$expression," )

#end

#end

#end

#if( !$expRemove.isEmpty() )

#set( $expression = "$expression REMOVE" )

#foreach( $entry in $expRemove )

#set( $expression = "$expression $entry" )

#if( $foreach.hasNext() )

#set( $expression = "$expression," )

#end

#end

#end

#set( $update = {} )

$util.qr($update.put("expression", "$expression"))

#if( !$expNames.isEmpty() )

$util.qr($update.put("expressionNames", $expNames))

#end

#if( !$expValues.isEmpty() )

$util.qr($update.put("expressionValues", $expValues))

#end

## Begin - key condition **

#if( $ctx.stash.metadata.modelObjectKey )

#set( $keyConditionExpr = {} )

#set( $keyConditionExprNames = {} )

#foreach( $entry in $ctx.stash.metadata.modelObjectKey.entrySet() )

$util.qr($keyConditionExpr.put("keyCondition$velocityCount", {

"attributeExists": true

}))

$util.qr($keyConditionExprNames.put("#keyCondition$velocityCount", "$entry.key"))

#end

$util.qr($ctx.stash.conditions.add($keyConditionExpr))

#else

$util.qr($ctx.stash.conditions.add({

"id": {

"attributeExists": true

}

}))

#end

## End - key condition **

#if( $args.condition )

$util.qr($ctx.stash.conditions.add($args.condition))

#end

## Start condition block **

#if( $ctx.stash.conditions && $ctx.stash.conditions.size() != 0 )

#set( $mergedConditions = {

"and": $ctx.stash.conditions

} )

#set( $Conditions = $util.parseJson($util.transform.toDynamoDBConditionExpression($mergedConditions)) )

#if( $Conditions.expressionValues && $Conditions.expressionValues.size() == 0 )

#set( $Conditions = {

"expression": $Conditions.expression,

"expressionNames": $Conditions.expressionNames

} )

#end

## End condition block **

#end

#set( $UpdateItem = {

"version": "2018-05-29",

"operation": "UpdateItem",

"key": $Key,

"update": $update,

"_version": $util.defaultIfNull($args.input["_version"], 0)

} )

#if( $Conditions )

#if( $keyConditionExprNames )

$util.qr($Conditions.expressionNames.putAll($keyConditionExprNames))

#end

$util.qr($UpdateItem.put("condition", $Conditions))

#end

$util.toJson($UpdateItem)

## [End] Mutation Update resolver. **

4 demo

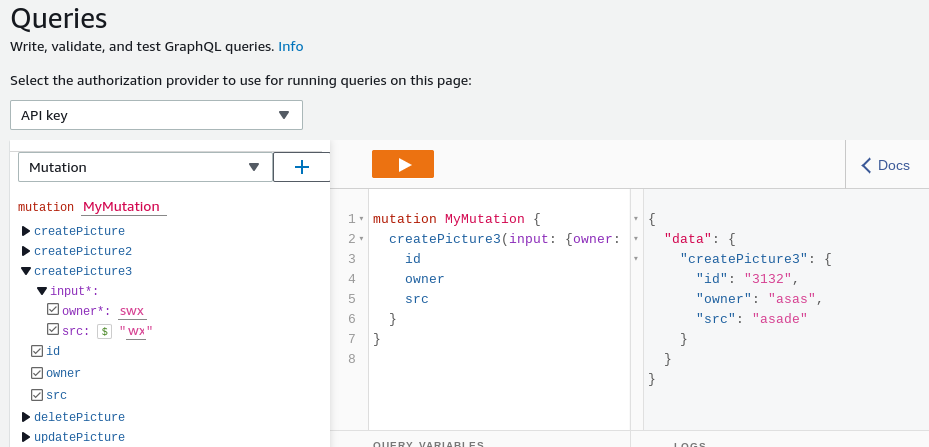

We will demo the GraphQL schema below:

type Picture {

id: ID!

owner: ID!

src: String

}

input CreatePictureInput {

owner: ID!

src: String

}

type Mutation {

createPicture2(input: CreatePictureInput!): Picture

createPicture3(input: CreatePictureInput!): Picture

}

Using pseudo-Haskell:

createPicture3 :: (owner:ID! × src:String) -> (id:ID! × owner:ID! × src:String)

4.1 MVP example

The simplest example for understanding how pipeline resolvers work.

This pipeline resolver wraps no chain of functions.

import {util} from '@aws-appsync/utils';

export function request(ctx) {

return {"id":3132,"owner":"asas","src":"asade"};

}

export function response(ctx) {

return ctx.prev.result;

// ctx.prev.result := {"id":3132,"owner":"asas","src":"asade"}

}

4.2 Emulating a GraphQL query with test context

CloudWatch logging must be enabled to make any use of this.

AppsyncApp >> Settings >> Logging >> Include Verbose content

- CRITICISM: The test assumes each function is completely independent of the function chain.

- It cannot be used to test an entire chain.

- Fill in "arguments": {"input": ...} to test request(ctx)

- Fill in "result": {...} to test response(ctx)

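As a rough local analogue of the console test, the two handlers can be called by hand with a context of the same shape as the test context in this section. This is a sketch in plain JavaScript: the handler bodies are illustrative and the `payload` field is a hypothetical name, not a real request format.

```javascript
// Handlers under test. In AppSync these would be the exported request and
// response of a single function; the bodies here are illustrative only.
function request(ctx) {
  return { payload: ctx.arguments.input };
}
function response(ctx) {
  return ctx.result;
}

// Same shape as the console's test context.
const testContext = {
  arguments: { input: { owner: 'bob', src: 'ross' } },
  source: {},
  result: { id: 32, owner: 'bill', src: 'as' },
  request: {},
  prev: {},
};

// Each handler is evaluated on its own: request reads `arguments`,
// response reads the hand-written `result`. No data source is called.
const requestOutput = request(testContext);
const responseOutput = response(testContext);
console.log(requestOutput, responseOutput);
```

The request output carries owner "bob" while the response output carries owner "bill", which illustrates the criticism: the two handlers are tested in isolation, so nothing ties the response to what the request actually sent.
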
{

"arguments": {

"input": {

"owner": "bob",

"src": "ross"

}

},

"source": {},

"result": {

"id":32,

"owner":"bill",

"src":"as"

},

"request": {},

"prev": {}

}

5 DO NOT FETCH Data Source via Resolver

DO NOT USE PIPELINE RESOLVER TO FETCH DYNAMODB

Pipeline resolvers DO NOT have the ability to access data sources of any kind.

- YOU MUST USE A FUNCTION to fetch from a data source; the UI gives the option to bind a function to a DynamoDB table.

- The results of a DynamoDB fetch are automatically converted from DynamoDB's typed format, e.g. "id": {"S": "person"} => id : "person"

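To make the conversion concrete, here is a minimal sketch of what such an unwrapping could look like in plain JavaScript. `fromAttributeValue` and `fromItem` are hypothetical helpers covering only a few DynamoDB types. AppSync does this for you, so resolver code does not need them.

```javascript
// Hypothetical helpers illustrating the conversion only.
function fromAttributeValue(av) {
  if ('S' in av) return av.S;                 // string
  if ('N' in av) return Number(av.N);         // numbers arrive as strings
  if ('BOOL' in av) return av.BOOL;           // boolean
  if ('NULL' in av) return null;              // explicit null
  if ('L' in av) return av.L.map(fromAttributeValue); // list
  if ('M' in av) return fromItem(av.M);       // nested map
  throw new Error(`unsupported attribute type: ${Object.keys(av)}`);
}

// Unwraps every attribute of an item into a plain object.
function fromItem(item) {
  return Object.fromEntries(
    Object.entries(item).map(([key, av]) => [key, fromAttributeValue(av)])
  );
}

const plain = fromItem({
  id: { S: 'person' },
  owner: { S: 'gv' },
  tags: { L: [{ S: 'a' }, { N: '2' }] },
});
console.log(plain);
```
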
5.1 MVP of a function auto-translating a DynamoDB result

- When a function uses DynamoDB as its data source, the result passed to its response(ctx) is automatically translated from the DynamoDB type format into a GraphQL-compatible format, e.g. "id": {"S": "person"} => id : "person"

//remember to select data source as your dynamoDB table

//we named our function `unit`

import {util} from '@aws-appsync/utils';

export function request(ctx) {

const {input: values} = ctx.arguments

const id = util.autoId();

return {

"version": "2018-05-29",

operation: 'PutItem',

key: util.dynamodb.toMapValues({"id":id}),

attributeValues: util.dynamodb.toMapValues(values),

};

}

export function response(ctx) {

return ctx.result

}

// pipeline resolver: notice you don't even get an option to select a data source

export function request(ctx) {

return {};

}

export function response(ctx) {

return ctx.prev.result;

}

In CloudWatch you should see the result below

PipelineResolver => unit function request => unit function response => PipelineResolver

"logType": "BeforeRequestFunctionEvaluation",

...

"context": {

"arguments": {

"input": {

"owner": "gv",

"src": "vhv"

}

},

"stash": {},

"outErrors": []

},

"fieldInError": false,

"evaluationResult": {},

"errors": [],

"logType": "RequestFunctionEvaluation",

"functionName": "unit",

...

"evaluationResult": {

"version": "2018-05-29",

"operation": "PutItem",

"key": {

"id": {

"S": "52216404-ff5b-40b2-9b70-a7c62c9f80bd"

}

},

"attributeValues": {

"owner": {

"S": "gv"

},

"src": {

"S": "vhv"

}

}

},

"parentType": "Mutation",

"path": [

"createPicture2"

],

"requestId": "2d30f54c-3d38-4d29-a355-7e49b13c1ee8",

"context": {

"arguments": {

"input": {

"owner": "gv",

"src": "vhv"

}

},

"prev": {

"result": {}

},

"stash": {},

"outErrors": []

},

"errors": [],

"logType": "ResponseFunctionEvaluation",

"functionName": "unit",

...

"evaluationResult": {

"owner": "gv",

"src": "vhv",

"id": "52216404-ff5b-40b2-9b70-a7c62c9f80bd"

},

"parentType": "Mutation",

"path": [

"createPicture2"

],

"requestId": "2d30f54c-3d38-4d29-a355-7e49b13c1ee8",

"context": {

"arguments": {

"input": {

"owner": "gv",

"src": "vhv"

}

},

"result": {

"owner": "gv",

"src": "vhv",

"id": "52216404-ff5b-40b2-9b70-a7c62c9f80bd"

},

"prev": {

"result": {}

},

"stash": {},

"outErrors": []

},

"errors": [],

"logType": "AfterResponseFunctionEvaluation",

...

"context": {

"arguments": {

"input": {

"owner": "gv",

"src": "vhv"

}

},

"result": {

"owner": "gv",

"src": "vhv",

"id": "52216404-ff5b-40b2-9b70-a7c62c9f80bd"

},

"prev": {

"result": {

"owner": "gv",

"src": "vhv",

"id": "52216404-ff5b-40b2-9b70-a7c62c9f80bd"

}

},

"stash": {},

"outErrors": []

},

"fieldInError": false,

"evaluationResult": {

"owner": "gv",

"src": "vhv",

"id": "52216404-ff5b-40b2-9b70-a7c62c9f80bd"

},

"errors": [],

- The lesson of the story is:

- you CANNOT fetch data from a pipeline resolver itself

- you MUST use functions to fetch from data sources

- functions automatically convert the DynamoDB type format

6 Translate VTL to JS

- vtl: $util.qr() ==> not needed in js

- it is used to run VTL statements while suppressing their output; JS statements produce no output by default

- vtl: $util.toJson($obj) ==> JSON.stringify(obj) in js (a JS handler normally just returns the object)

- vtl: $ctx.args.input.keySet() ==> Object.keys(ctx.args.input) in js
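
As a hedged illustration of these mappings, here is a plain-JavaScript sketch of the SET/REMOVE part of the VTL template in section 3. `buildUpdate` is a hypothetical name, the ADD clause and conditions are omitted, and values are left unmarshalled, whereas a real resolver would still convert them to the DynamoDB format.

```javascript
// Sketch only: mirrors the expression-building loop of the VTL template.
function buildUpdate(input, keyFields = ['id']) {
  const expNames = {};
  const expValues = {};
  const setParts = [];
  const removeParts = [];

  // $ctx.args.input.keySet() ==> Object.keys(input)
  for (const key of Object.keys(input)) {
    if (keyFields.includes(key)) continue; // key attributes are not updated
    expNames[`#${key}`] = key;
    if (input[key] === null || input[key] === undefined) {
      removeParts.push(`#${key}`); // a null value means "remove this attribute"
    } else {
      setParts.push(`#${key} = :${key}`);
      expValues[`:${key}`] = input[key];
    }
  }

  const clauses = [];
  if (setParts.length) clauses.push(`SET ${setParts.join(', ')}`);
  if (removeParts.length) clauses.push(`REMOVE ${removeParts.join(', ')}`);

  // No $util.qr() wrapper: JS statements emit no output.
  // No $util.toJson(): the object itself is returned.
  return {
    expression: clauses.join(' '),
    expressionNames: expNames,
    expressionValues: expValues,
  };
}

const update = buildUpdate({ id: '1', owner: 'bob', src: null });
console.log(update.expression);
```

The loop, the string building, and the final return each replace several `#set` and `$util.qr(...)` lines of the VTL version, which is most of what the translation amounts to.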